NB: I initially wrote this with the goal of submitting it to SlateStarCodex; however, things are on hold with that site, so I thought I would just post it here instead.

As I get older, I find that I am less willing to tolerate bad books. In the past, out of a strange sense of guilt, I would force myself to keep reading books even though I did not enjoy them. Nowadays, if I start to get bored with a book, I stop reading as early as possible and move on to other books. The Great Influenza by John M. Barry tested this rule immensely.

This was a book I had heard quite a bit about over the past few years and had long been interested in reading. I have a background in public health, attended Johns Hopkins University, and wanted to learn more about influenza outbreaks, all of which made me excited to finally sit down and read it.

The book starts off by making two compelling arguments: first, that the US medical education system was incredibly weak in 1918, with most doctors graduating without any real expertise or training; and second, that the outbreak began at an army camp in Kansas. The author presents both arguments methodically and with extensive background. About halfway through the book, however, as the epidemic grows, he abandons both of them and shifts his focus to how different communities and the US government responded to the outbreak. In support of the first argument, the author explains that the Johns Hopkins School of Medicine was intended to improve medical education in the US and was modeled on the medical education system of German universities. Many of its early founders are extensively profiled, only to vanish from the book entirely. This is a recurring pattern: many individuals are introduced, given a thorough background, and then never mentioned again.

In the Afterword, the author says he had hoped the book would take only one to two years to complete; it ended up taking him seven. It shows: there are many examples of the author describing events and people in excessive detail, only for the event or person never to be mentioned again. For example: “Cincinnati’s public health agencies had examined 7,058 influenza victims since the epidemic had ended and found that 5,264 needed some medical assistance; 643 of them had heart problems, and an extraordinary number of prominent citizens who had influenza had died suddenly early in 1919.” (p. 392) This is the first time Cincinnati is mentioned in the book, and it is unclear what these statistics add to the narrative, since Cincinnati is never mentioned again.

This book is sorely in need of an editor to prune some of the superfluous text. For example:

“It also seemed - although this was not scientifically established - that those who went to bed the earliest, stayed there the longest, and had the best care survived at the highest rates. Those findings meant of course that the poor died in larger numbers than the rich.” (p. 408)

Then there are the basic run-on sentences:

“Ten days, two weeks, sometimes even longer than two weeks after the initial attack by the virus, after victims had felt better, after recovery had seemed to begin, victims were suddenly getting seriously ill again. And they were dying.” (p. 317)

Reading page after page of this is exhausting.

This is not to say the book is all bad. There are some interesting parallels between today and the 1918 outbreak that are worth exploring. For example, in 1918 President Wilson never mentioned the outbreak in public and rarely, if ever, discussed it in private with his staff. The US Surgeon General at the time, Rupert Blue, was extremely slow even to request influenza case counts and instead focused on promoting patriotism and the virtues of Liberty Loans. In Phoenix and Philadelphia, private citizen groups formed “Citizens Committees” and took it upon themselves to enforce quarantine and sanitation ordinances.

Finally, I was cautiously optimistic about the Afterword, written ten years after publication in 2018, and hoped it would be a breath of fresh air. Sadly, it is not. In it, the author rants that wearing face masks is useless and that the only thing that might get society through a similar epidemic is belief in our elected leaders.

In many ways, reading this book is like performing a science experiment: there is an interesting signal in there, but to get to it one must sift through a great deal of noise. The book as a whole was moderately interesting but far, far too long. For background on the 1918 flu, I would suggest reading the excellent Wikipedia page on it instead.

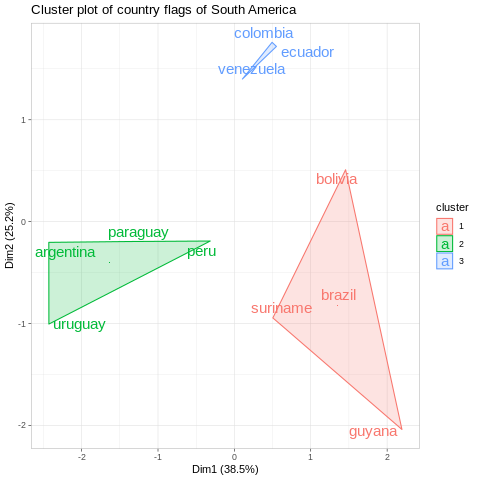

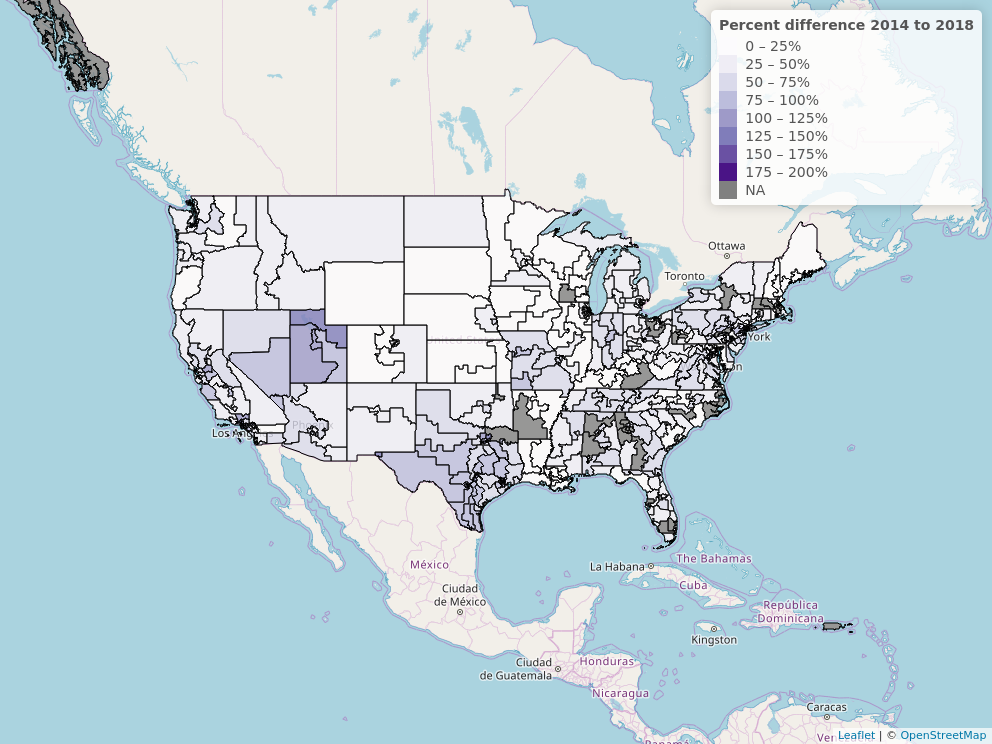

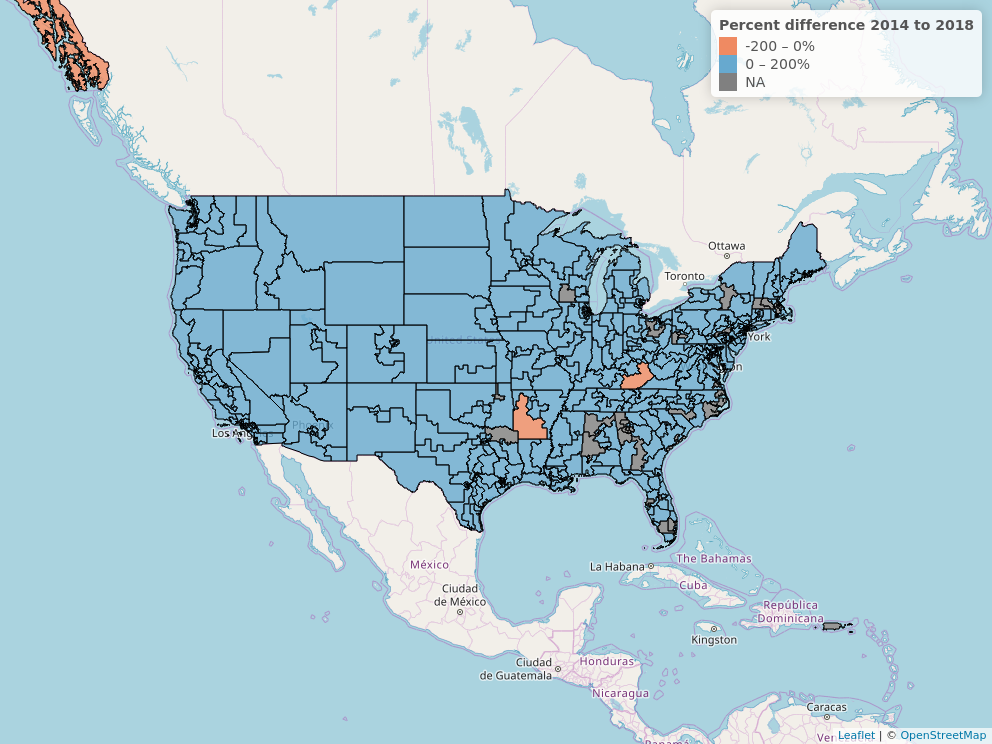

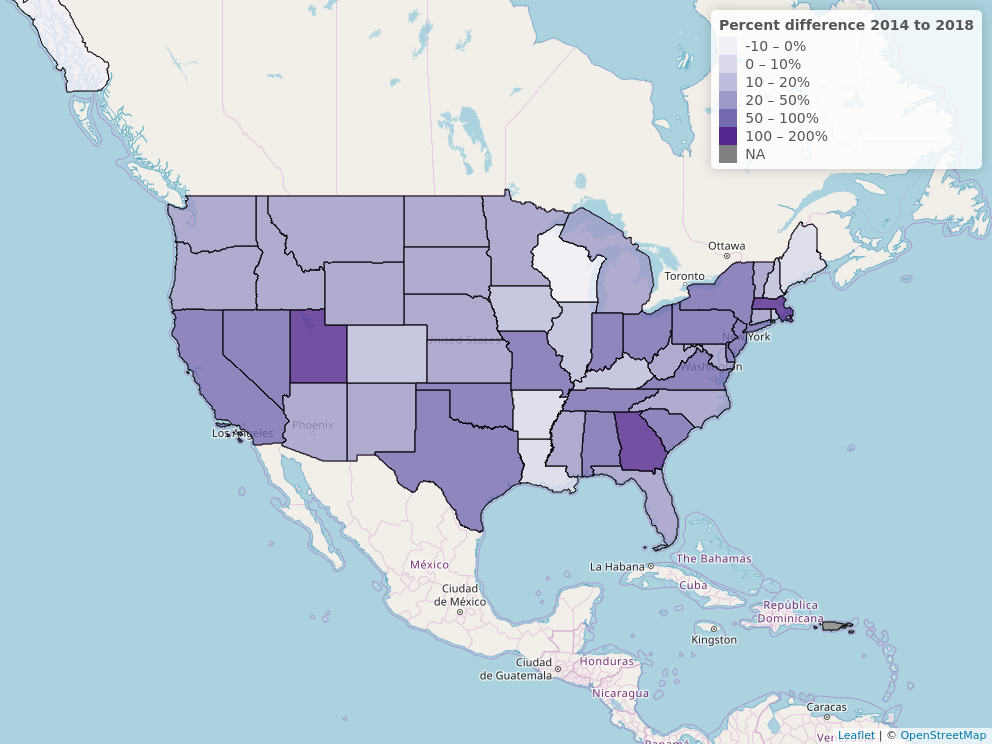


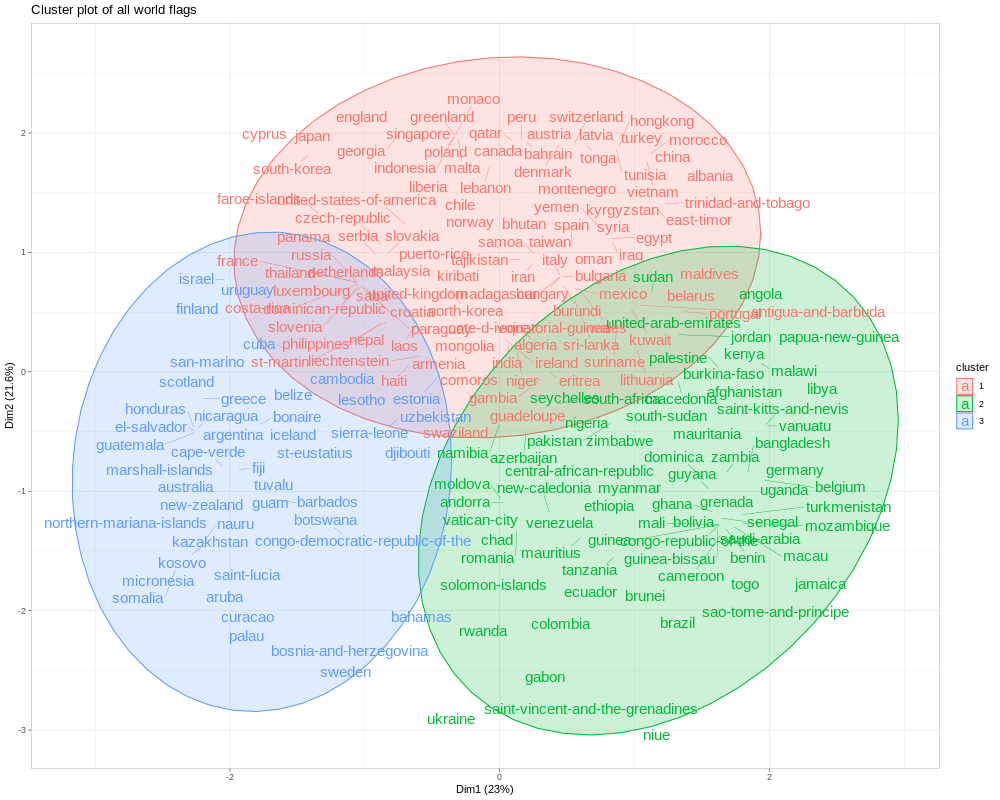
